

Describe the bug

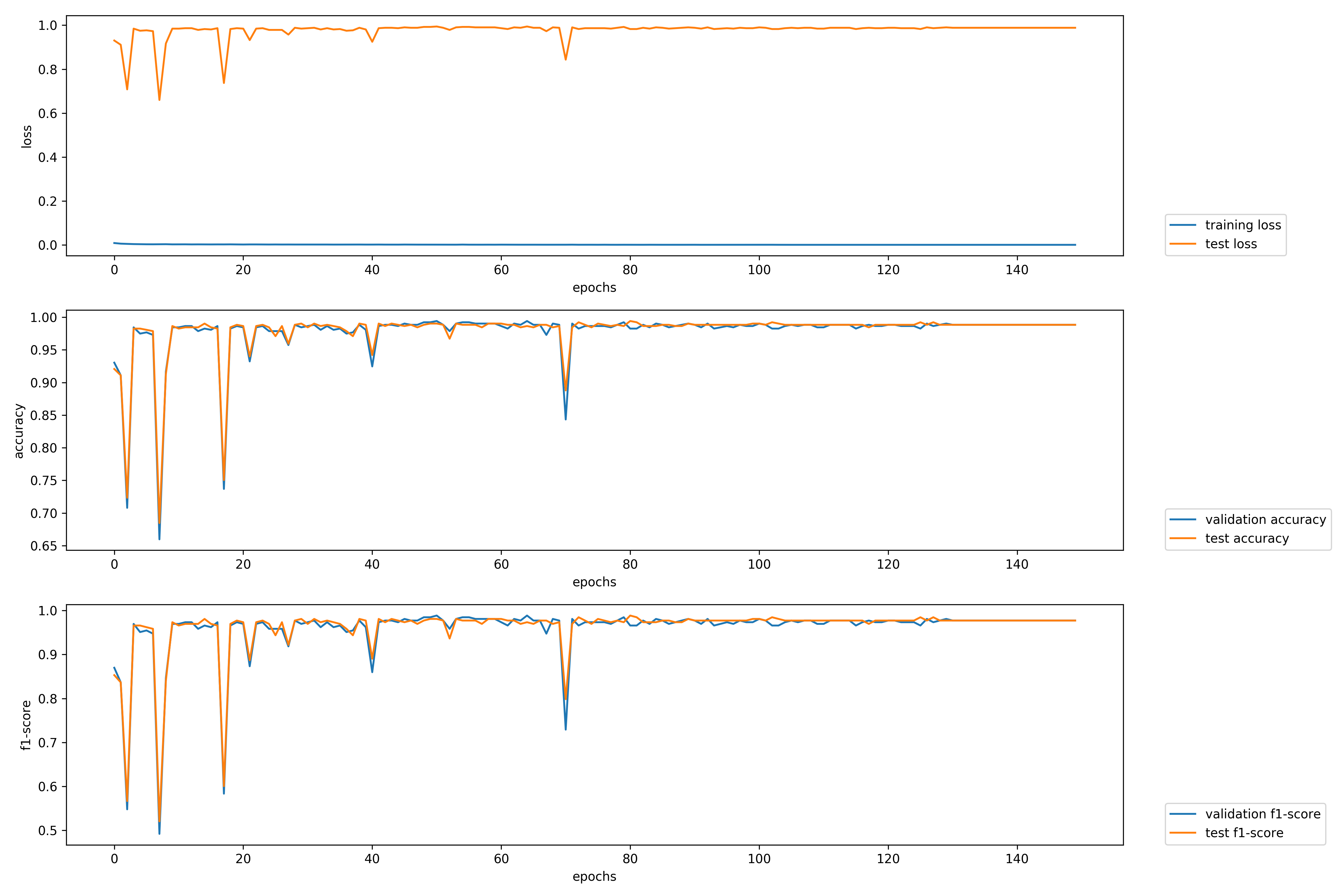

I am training a text classification model as described in the tutorial. When I plot the training process, the accuracy and F1 score curves converge and stabilize over 50 epochs. The training and test loss curves also stabilize, but a wide gap remains between them.

Looking through `loss.tsv`, it seems that the test loss is not scaled the way the training loss is.
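To illustrate why an unscaled loss ends up far above a scaled one, here is a minimal sketch. It assumes, hypothetically, that "scaled" means the per-batch losses are averaged over the number of batches while the unscaled loss is their plain sum. The function names and numbers are illustrative only and are not Flair's code.

```python
from typing import List


def epoch_loss_mean(batch_losses: List[float]) -> float:
    """Average of the per-batch losses (the 'scaled' variant in this sketch)."""
    return sum(batch_losses) / len(batch_losses)


def epoch_loss_sum(batch_losses: List[float]) -> float:
    """Plain sum of the per-batch losses (the 'unscaled' variant in this sketch)."""
    return sum(batch_losses)


# Hypothetical per-batch losses for one epoch with 4 batches.
batch_losses = [0.5, 0.25, 0.25, 0.5]

mean_loss = epoch_loss_mean(batch_losses)
summed_loss = epoch_loss_sum(batch_losses)

# The summed value is larger by exactly the number of batches,
# so the two curves differ by a constant factor rather than in shape.
print(mean_loss, summed_loss, summed_loss / mean_loss)
```

Under this assumption the gap between the curves is a constant multiplicative factor, which would explain why both curves stabilize yet stay far apart.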

After scaling the test loss manually, the resulting graph makes sense:

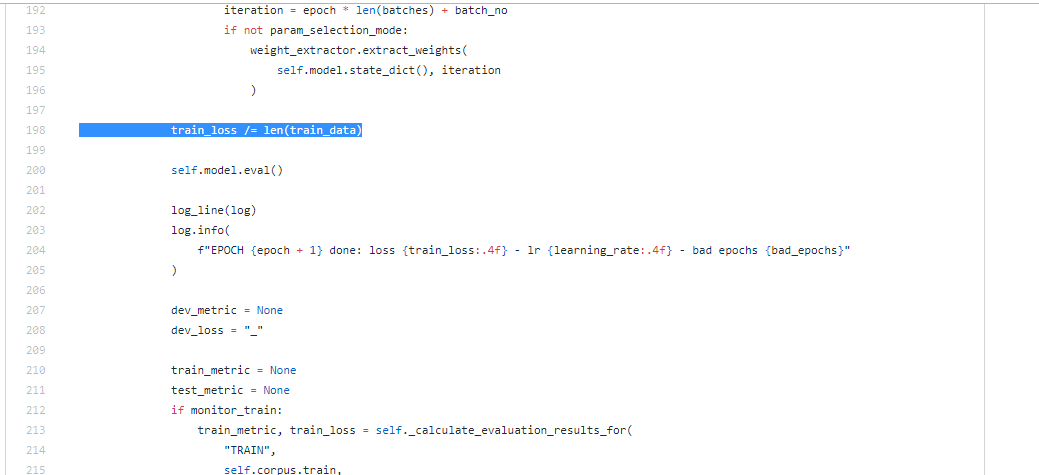
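The manual rescaling can be sketched with the Python standard library alone. This assumes that `loss.tsv` is tab-separated with a header row containing `TRAIN_LOSS` and `TEST_LOSS` columns, and that the test loss should be divided by the number of test batches. The column names, the `rescale_test_loss` helper, the sample values, and `num_test_batches` are all hypothetical and should be checked against the actual file and setup.

```python
import csv
import io
from typing import Dict, List


def rescale_test_loss(tsv_text: str, num_test_batches: int) -> List[Dict[str, str]]:
    """Divide the (assumed) TEST_LOSS column by the number of test batches."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    rows = []
    for row in reader:
        # Hypothetical column name; check the header of your own loss.tsv.
        row["TEST_LOSS"] = str(float(row["TEST_LOSS"]) / num_test_batches)
        rows.append(row)
    return rows


# Illustrative sample in the assumed layout, only to demonstrate the call.
sample = "EPOCH\tTRAIN_LOSS\tTEST_LOSS\n1\t0.5\t8.0\n2\t0.25\t4.0\n"
rescaled = rescale_test_loss(sample, num_test_batches=16)
for row in rescaled:
    print(row["EPOCH"], row["TRAIN_LOSS"], row["TEST_LOSS"])
```

To apply it to a real run, `sample` would be replaced by the contents of the actual `loss.tsv`, e.g. `open("loss.tsv").read()`.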

Inspecting the Flair code at line 198 of https://github.com/zalandoresearch/flair/blob/master/flair/trainers/trainer.py, this explanation seems plausible, since the training loss is scaled there while the test loss does not appear to be.

Could you please confirm or clarify this?

Expected behavior

The graphs produced by Flair should plot the training and test losses on the same scale.

The data used is a sample of the IMDB dataset.

The code was taken from the text classification tutorial:

https://github.com/zalandoresearch/flair/blob/master/resources/docs/TUTORIAL_7_TRAINING_A_MODEL.md